Since 2018, The MITRE Corporation has been evaluating the performance of EDR-class security products based on the MITRE ATT&CK framework. This is the first comprehensive evaluation of its kind: rather than just analyzing malware detection levels, it aims to create a full picture of an EDR solution’s ability to handle all the stages of an advanced multi-stage attack. However, some aspects of the evaluation process, while not obvious, need to be taken into account when using the results of this test, for example to evaluate the current security levels of your organization or to choose a new security solution. In this article, we aim to clarify some important aspects of the ATT&CK Evaluations.

What is MITRE ATT&CK

MITRE researchers maintain a vast database of techniques used in targeted attacks worldwide. These techniques are analyzed, grouped and associated with the adversaries (hacker groups) known to use them. Using this ATT&CK matrix, IT professionals can explore the visibility provided by an EDR solution, both in general and for specific applications. You can, for example, focus on those attacks most relevant to your area of business and see how your network is protected from these specific attacks with your current security solution. This is at present the only assessment framework of its kind able to offer these insights.

Before the evaluation process begins, participants are told the name of the adversary group whose typical activities will be emulated in the current test. The first round of MITRE Evaluations, carried out in 2018-2019, was based on the techniques of the APT3 group (aka Pirpi, UPS, Buckeye, Gothic Panda and TG-0110). Round two, in which Kaspersky took part, was conducted in 2019-2020, this time using typical attack techniques of APT29 (aka CozyDuke, Cozy Bear, The Dukes). It should be noted that the emulated adversary may use any of many different implementations of each attack technique, so knowing the APT name doesn’t really provide any serious advantage to the participants.

Prior to the evaluation, MITRE invites participants to send in their own research on the adversary and attacks chosen for the evaluation. It’s assumed that MITRE can use this data to enrich the ATT&CK database and to improve its emulation of the adversary for test purposes. As APT29 is well-known to Kaspersky experts, we contributed our own threat intelligence on this group to MITRE.

What are the limitations

The MITRE methodology is pretty advanced: every targeted attack is emulated stage by stage, while the testers check how the security solution under evaluation collects the data and detects all those malicious activities. As one of the main features of an APT is secrecy, we could say that the main idea of an ATT&CK Evaluation is to test “the visibility of the invisible”.

But this leads to a key limitation: during the evaluation, the security product is not actually allowed to take any preventative/remediation actions at any stage of the attack. To comply with this rule, some vendors have had to develop a special ‘inform-only’ mode (so the product will only detect the attack) or just to turn off all the protection technologies that could block the attack.

Of course, this doesn’t reflect real-life conditions, where Prevention modules should stop the attack as soon as possible. However, this requirement is understandable, as the goal of the evaluation is to check the product’s visibility of all aspects of the attack matrix. If Prevention/Protection features are active and the attack is blocked at an early stage, it’s not possible to estimate how the security system would detect the subsequent tactics and techniques of the entire ‘attack chain’, and how it can correlate separate alarms and events into one security incident. Besides, if realism were maintained and each simulated attack were blocked wherever possible, the simulated adversary would have to start over and over again trying other methods, making the evaluation too long and too expensive. Test attacks are carried out manually by MITRE experts, and it already takes three days to evaluate each product, with a large and growing list of vendors queuing to participate.

MITRE has, however, already responded to criticism about this shortcoming: the rules for Round 3 of the Evaluations should also take the protection capabilities of each product into account.

Another limitation of the current test worth mentioning is that there’s no ‘background noise’. The test doesn’t emulate any legitimate applications or processes that have nothing to do with the attack. All the activity that the Blue Team (defense experts/vendors) can see on the protected endpoints is the malicious activity of the Red Team (emulated adversary/MITRE). This greatly simplifies the detection task. In real life, there’s always some form of legitimate activity taking place on the attacked computers, which leads to two additional requirements for a security solution: the ability to spot suspicious behavior among the ‘noise’ of legitimate events, and the minimizing of false positive rates.

The emulation of user activity would make the test more realistic. But again, we understand that even the current version of ATT&CK Evaluation wasn’t easy to put together, and we’re asking a great deal here.

How the detections are evaluated

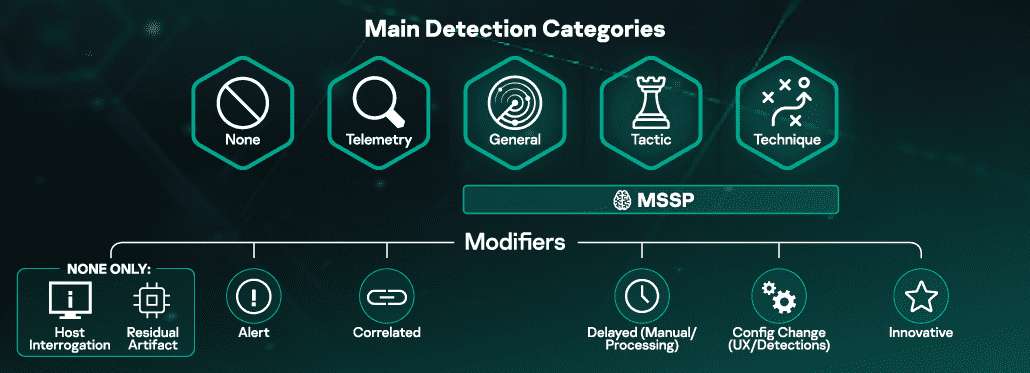

All the product’s reactions to the specific actions of the adversary are categorized, based on how the detection occurs. The main detection categories in Round 2 are:

None – the product didn’t notice the suspicious action. No data collected, no notifications issued.

Telemetry – some data about the event collected, but no complex logic applied, and no labeling of the event (malicious or not). Basically, there’s just an event log.

MSSP – the detection is presented from a Managed Security Service Provider (MSSP) perspective, based on human analysis. An unlimited number of experts may participate remotely in this aspect of the test. During Kaspersky’s Round 2 evaluation, this element was handled by Kaspersky Managed Protection Service, which benefits from both automatic and manual detection. This form of ‘MSSP’-style human analysis won’t be included in Round 3 of the Evaluations.

General – the detection data clearly shows that a suspicious event occurred, but the solution has not provided the details of the action (no tactics, no techniques).

Tactic – information on the potential intent of the suspicious activity (the stage of the attack) is provided.

Technique – the most advanced type of detection: the product shows what’s going on and how the attack is being conducted, mapping the attacker’s actions to the MITRE ATT&CK matrix.
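To make the categories above more tangible, here is a minimal Python sketch of how the results for one product could be tallied per category. The step labels, the sample results and the assumption that a single attack step can carry more than one detection are ours, purely for illustration; this is not MITRE’s data format.

```python
from collections import Counter
from enum import Enum


class Category(Enum):
    """The main Round 2 detection categories described above."""
    NONE = "None"
    TELEMETRY = "Telemetry"
    MSSP = "MSSP"
    GENERAL = "General"
    TACTIC = "Tactic"
    TECHNIQUE = "Technique"


def tally(results):
    """Count detections per category across all emulated attack steps."""
    counts = Counter()
    for categories in results.values():
        counts.update(categories)
    return counts


# Hypothetical results: attack step -> categories recorded for that step.
sample_results = {
    "step_1": [Category.TELEMETRY, Category.TECHNIQUE],
    "step_2": [Category.NONE],
    "step_3": [Category.TELEMETRY, Category.GENERAL, Category.MSSP],
}

counts = tally(sample_results)
for category in Category:
    # Counter returns 0 for categories that never occurred.
    print(f"{category.value:<10} {counts[category]}")
```

A tally like this is only a summary of what was seen; it says nothing about which category mix is ‘better’.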

In addition to these main categories, each detection could be marked with one or more Modifiers that provide more information about the detection. Here are some important Modifiers:

Delayed – means the detection didn’t come within the period defined as ‘real time’, but arrived later, as a result of some subsequent or additional processing (for example, it was produced by a human expert from the MSSP service). The Delayed modifier is not applied to delays caused by automated data ingestion or connectivity issues.

Configuration Change – this modifier is used when a vendor has had to tune the product after the start of the evaluation. By default, an out-of-the-box solution must be used in the test, with default settings. Any configuration changes made prior to the test must be reported and shown on a dedicated page of the MITRE site, so that any customer can apply these to their copy of the product, in order to reproduce both the attack and the product response. However, if a product doesn’t see some events during the test, the vendor can obtain permission for additional tuning on day 3. If the product sees the event after this tuning, this detection is marked with the Configuration Change modifier.

Another important modifier is Host Interrogation – this means that a detection happened only after additional data was manually pulled from the endpoint for analysis.

A description of further modifiers can be found on the MITRE site.
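As a rough illustration of how modifiers can be used when reading the results, the sketch below keeps only those detections that carry none of the three modifiers described above. The `Detection` class, the `unassisted` helper and the sample data are hypothetical names of our own, not part of any MITRE tooling.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Detection:
    step: str                 # hypothetical label of the emulated attack step
    category: str             # e.g. "Telemetry", "General", "Technique"
    modifiers: frozenset = field(default_factory=frozenset)


# The three modifiers discussed in this article.
FLAGGED = frozenset({"Delayed", "Configuration Change", "Host Interrogation"})


def unassisted(detections):
    """Keep detections that carry none of the flagged modifiers."""
    return [d for d in detections if not (d.modifiers & FLAGGED)]


# Hypothetical sample data, for illustration only.
sample = [
    Detection("step_1", "Technique"),
    Detection("step_2", "General", frozenset({"Delayed"})),
    Detection("step_3", "Telemetry", frozenset({"Configuration Change"})),
    Detection("step_4", "Tactic", frozenset({"Host Interrogation", "Delayed"})),
    Detection("step_5", "Telemetry"),
]

print([d.step for d in unassisted(sample)])  # ['step_1', 'step_5']
```

Whether a modified detection should count for less is a judgment call for the reader; the filter simply makes the distinction visible.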

How to interpret the results

You can now see that the MITRE ATT&CK Evaluation generates a complex table assessing all the detections a given security solution has produced, for different stages of the attacks of a specific adversary. Yet there’s no scoring system in this test, and no comparison between different vendors (as happens in the ratings of anti-malware tests).

For example, some potential EDR purchasers may prefer a solution that generates more detections in the ‘Technique’ and ‘General’ categories rather than in ‘Telemetry’. But what if the detection is a false positive? If so, ‘Telemetry’ wins out: the administrator receives only the event data, with no incorrect conclusion about its maliciousness. However, in this case the customer will need additional resources to analyze the collected data.

Another controversial issue: should fully automatic detection by the local product be considered as a higher achievement than human-input detections from a remote MSSP service? At first glance, the idea of a totally autonomous security product sounds great, especially for those who can’t afford their own Threat Hunting teams. But the truth is, there are some attack techniques that still require human expertise to uncover: an advanced attacker can use legitimate tools and copy real sysadmins' behavior so that no anomalies would be detected by an automatic security system.

That's why it's important that ATT&CK Evaluation results are presented ‘as-is’, so that customers can decide for themselves which product capabilities are important to their particular organizations’ security.
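The absence of an official score can be illustrated with a small sketch. Below, two hypothetical products are ranked under two invented weightings, one favoring labeled alerts and one favoring raw visibility. All the names, counts and weights are our own assumptions for the sake of the example; MITRE publishes no such weights.

```python
def score(counts, weights):
    """Weighted sum of detection counts; categories without a weight count as 0."""
    return sum(weights.get(category, 0) * n for category, n in counts.items())


def ranking(products, weights):
    """Product names ordered from highest to lowest weighted score."""
    return sorted(products, key=lambda name: score(products[name], weights),
                  reverse=True)


# Hypothetical detection counts per category for two made-up products.
products = {
    "product_a": {"Telemetry": 40, "General": 5, "Tactic": 5, "Technique": 10},
    "product_b": {"Telemetry": 10, "General": 10, "Tactic": 10, "Technique": 20},
}

# Two invented buyer preferences; neither is an official scoring scheme.
weightings = {
    "prefers_labeled_alerts": {"Telemetry": 1, "General": 2, "Tactic": 3, "Technique": 4},
    "prefers_raw_visibility": {"Telemetry": 4, "General": 1, "Tactic": 1, "Technique": 1},
}

for label, weights in weightings.items():
    print(label, ranking(products, weights))
```

With these numbers the first weighting puts product_b on top and the second puts product_a on top, which is exactly why a single ‘winner’ cannot be read off the raw results.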

ATT&CK mapping in the products

In the wake of ATT&CK’s popularity, many security vendors have started to map the detections or the telemetry generated by their products to ATT&CK techniques, trying to make the maximum possible use of names from the matrix table. This may create the impression that a product ‘covers all MITRE listed attacks’. In fact, it’s just a marketing trick, and not a harmless one.

It’s basically incorrect to talk about a product ‘covering a technique’. Every ATT&CK technique can be implemented in many different ways. The security solution may see some of these implementations, but not all of them. So even if a vendor marks a technique as ‘detected’ by the product in some promo, this doesn’t mean the product will be able to spot a different implementation of the same technique in a real attack. Mapping detections to ATT&CK technique names doesn’t prove the product’s strength; it just provides some additional data to security officers.
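The gap between ‘technique covered’ and ‘implementation detected’ can be shown with a short sketch. The technique and implementation labels below are placeholders we made up, as are both helper functions; the point is only the difference between the two ways of counting.

```python
# Hypothetical data: technique -> implementations observed in attacks.
known_implementations = {
    "technique_1": {"impl_a", "impl_b", "impl_c", "impl_d"},
    "technique_2": {"impl_e", "impl_f"},
}

# Hypothetical: implementations a given product actually detects.
detected = {
    "technique_1": {"impl_a"},
    "technique_2": {"impl_e", "impl_f"},
}


def name_level_coverage(known, found):
    """Fraction of techniques with at least one detected implementation."""
    covered = [t for t in known if found.get(t)]
    return len(covered) / len(known)


def implementation_level_coverage(known, found):
    """Per technique, the fraction of known implementations that are detected."""
    return {
        t: len(found.get(t, set()) & impls) / len(impls)
        for t, impls in known.items()
    }


print(name_level_coverage(known_implementations, detected))
print(implementation_level_coverage(known_implementations, detected))
```

Counted by name, this made-up product ‘covers’ both techniques; counted by implementation, it sees only one of the four known variants of the first one.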

ATT&CK Evaluation provides a much better way to estimate the product’s real capabilities: by looking at the particular implementation of an attack technique, a genuine conclusion can be reached based on the product’s ability to detect the specific implementation.

On the other hand, for all the advantages of the ATT&CK Evaluation, it shouldn’t be treated as a ‘gold standard’. In addition to the limitations listed above, one must remember that the ATT&CK database contains only known implementations of known attack techniques. But as the database continues to be updated, no doubt the MITRE methodology will also grow and develop further.

Read about our visit to MITRE and the results of our participation in the MITRE ATT&CK Evaluation in our other posts on Kaspersky in MITRE ATT&CK.