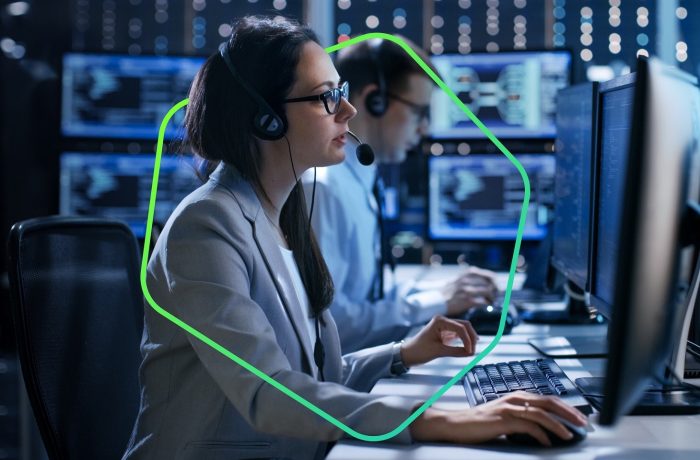

AI agents in your organization: managing the risks

The top-10 risks of deploying autonomous AI agents, and our mitigation recommendations.

Researchers have discovered that styling prompts as poetry can significantly undermine the effectiveness of language models’ safety guardrails.

The Whisper Leak attack lets an attacker guess the topic of your conversation with an AI assistant without decrypting the traffic. We explore how this is possible, and what you can do to protect your AI chats.

We’re going bargain hunting in a new way: armed with AI. In this post, we share examples of effective prompts.

How malicious extensions can spoof AI sidebars in the Comet and Atlas browsers, intercept user queries, and manipulate model responses.

A comprehensive guide to configuring privacy and security in ChatGPT: data collection and usage, memory, Temporary Chats, connectors, and account security.

A close look at attacks on LLMs: from ChatGPT and Claude to Copilot and other AI assistants that power popular apps.

A race between tech giants is unfolding before our very eyes. Who’ll be the first to transform the browser into an AI assistant app? As you test these new products, be sure to consider their enormous impact on security and privacy.

Most employees are already using personal LLM subscriptions for work tasks. How do you balance staying competitive with preventing data leaks?

Popular AI code assistants sometimes try to call libraries that don’t exist. But what happens if attackers actually create them?